AI Ethics and Governance: Building Responsible AI Systems in a Rapidly Evolving Tech Landscape

Introduction

As we stand on the brink of a new technological era, artificial intelligence (AI) is not just a tool but a catalyst for profound change across sectors. PwC’s 2017 “Sizing the Prize” analysis estimated that AI could contribute up to $15.7 trillion to the global economy by 2030. However, this rapid advancement is accompanied by significant ethical challenges that warrant immediate attention. Issues such as algorithmic bias, data privacy, and the potential for misuse highlight the urgent need for robust ethical frameworks and governance structures.

What is AI Ethics and Governance?

AI ethics and governance refer to the principles and frameworks that guide the responsible development and use of artificial intelligence technologies. AI ethics focuses on ensuring fairness, accountability, transparency, and respect for human rights in AI systems, addressing issues like algorithmic bias and data privacy. AI governance covers the policies, regulations, and oversight structures that put those principles into practice.

As AI systems increasingly influence decision-making processes and societal norms, the importance of ethics in their development cannot be overstated. Responsible AI development is essential not only to mitigate risks but also to foster public trust and ensure equitable outcomes. This blog will explore the principles and practices necessary for building responsible AI systems, emphasizing the critical role of ethics and governance in navigating the complexities of this transformative technology. By establishing a foundation for ethical AI, we can harness its potential while safeguarding against its inherent risks.

The Current State of AI

The rapid development of artificial intelligence (AI) technologies has ushered in a transformative era, with innovations such as generative pre-trained transformers (GPTs), autonomous systems, and facial recognition reshaping various industries. These advancements have enhanced efficiencies and opened new possibilities, but they also introduce significant ethical dilemmas.

Examples of Key Advancements

Generative Pre-trained Transformers (GPTs)

GPTs represent a significant leap in natural language processing, enabling machines to generate human-like text based on context.

Autonomous Systems

Autonomous systems, including self-driving vehicles and drones, utilize AI to perform tasks with minimal human intervention. These systems rely on advanced machine learning algorithms and sensor technologies such as LiDAR and radar to navigate complex environments safely.

Facial Recognition

Facial recognition technology has seen widespread adoption in security and surveillance applications. By employing deep learning algorithms, these systems can identify individuals with high accuracy.

Ethical Concerns

Bias and Fairness

AI algorithms often reflect the biases present in their training data, leading to unfair outcomes in applications like hiring or criminal justice. Ensuring fairness requires rigorous testing and validation processes to mitigate bias.

Privacy Invasion

The extensive data collection necessary for AI functionality poses significant privacy risks. Individuals may unknowingly consent to the use of their data for AI training, raising questions about informed consent and data ownership.

Accountability for AI Decisions

As AI systems become more autonomous, determining accountability for decisions made by these systems becomes increasingly complex. The lack of transparency in many AI models complicates efforts to assign responsibility when errors occur or harm is caused.

Governance Gaps

Compounding these challenges is a noticeable gap in governance mechanisms. As AI technologies evolve at an unprecedented pace, existing regulatory frameworks struggle to keep up, leaving developers and organizations to navigate ethical considerations largely on their own.

Closing these gaps calls for:

- Establishing Clear Regulatory Frameworks

- Promoting Ethical Standards

- Enhancing Stakeholder Collaboration

- Implementing Continuous Monitoring

- Investing in Education and Training

Key Ethical Issues in AI

As AI technologies continue to evolve, several ethical challenges arise, each with profound implications for society. Addressing these issues is crucial to ensure that AI systems are developed and deployed responsibly.

Bias and Fairness

Algorithmic bias occurs when AI systems produce unfair or discriminatory outcomes due to flawed data or biased algorithms. For instance, an experimental Amazon hiring algorithm was trained on resumes predominantly submitted by male candidates, leading it to favor male applicants and penalize resumes mentioning “women’s” activities, such as “women’s chess club captain.” Another notable example is the COMPAS algorithm, used in the U.S. judicial system to predict recidivism risk. A 2016 ProPublica analysis found that Black defendants who did not go on to reoffend were nearly twice as likely as their white counterparts to be labeled higher-risk, raising concerns about disparities in bail and sentencing decisions. These instances highlight the urgent need for rigorous testing and validation processes to mitigate bias in AI systems.
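
One simple form of the testing described above is to compare how often a model selects candidates from each group. The sketch below is illustrative only: the data is made up, the function names are hypothetical, and the 0.8 cutoff is an assumption borrowed from the "four-fifths" rule of thumb used in U.S. employment contexts rather than anything prescribed here.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(records):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest

# Hypothetical screening results: (group, was_shortlisted)
decisions = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

ratio = disparate_impact_ratio(decisions)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # assumed threshold (four-fifths rule of thumb)
    print("Selection rates differ enough to warrant a closer review.")
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a model deserves a closer look before deployment.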

Transparency and Explainability

The black-box nature of many AI systems complicates the understanding of how decisions are made. This lack of transparency can erode trust among users and stakeholders. Explainable AI (XAI) aims to make AI systems more interpretable by providing insights into their decision-making processes. Building trust through transparency is essential, especially in high-stakes applications such as healthcare and criminal justice, where understanding the rationale behind decisions can significantly impact lives.

Data Privacy and Security

The use of personal data in training AI models raises significant privacy concerns. Many AI systems rely on vast amounts of data, often collected without explicit consent from individuals. Compliance with global privacy laws such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) is critical to safeguard user data and maintain public trust. Organizations must implement robust data governance practices to mitigate risks associated with data breaches and unauthorized access.
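
As one small illustration of a data governance practice, direct identifiers can be replaced with keyed hashes before records reach a training pipeline. This is a minimal sketch under stated assumptions: the field names, the key, and the helper functions are hypothetical, and pseudonymized data is generally still treated as personal data under GDPR, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (hex string).

    The same input and key always give the same output, so records
    belonging to one person can still be linked without exposing the ID.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_for_training(record: dict, secret_key: bytes, direct_identifiers: set) -> dict:
    """Return a copy of `record` with direct identifiers pseudonymized."""
    return {
        field: pseudonymize(str(value), secret_key) if field in direct_identifiers else value
        for field, value in record.items()
    }

# Hypothetical record and key; a real key would come from a secrets manager.
key = b"example-key-do-not-use"
raw = {"email": "user@example.com", "age_band": "30-39", "visits": 12}
safe = prepare_for_training(raw, key, direct_identifiers={"email"})
print(safe)
```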

Autonomy and Accountability

Determining accountability for errors made by AI systems presents a complex challenge. As these systems become more autonomous, establishing who is responsible for their decisions—be it developers, organizations, or the AI itself—becomes increasingly difficult. This issue is particularly pressing in contexts like autonomous weapons, where ethical considerations around life-and-death decisions must be addressed. The potential for misuse or unintended consequences necessitates a thorough examination of accountability frameworks to ensure ethical compliance in AI deployment.

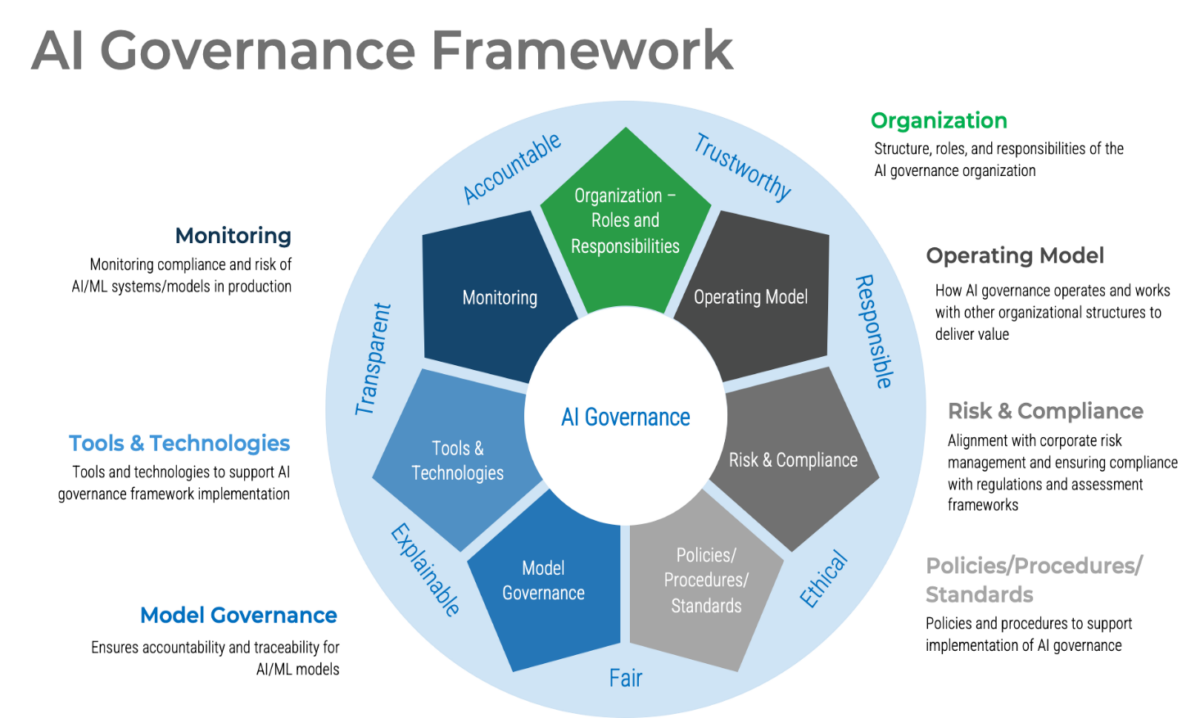

Governance Frameworks for Responsible AI

Establishing effective governance frameworks for artificial intelligence (AI) is critical to ensuring ethical development and deployment. These frameworks provide structured approaches to navigate the complexities of AI ethics, compliance, and risk management. Below are key components and considerations for developing robust AI governance frameworks, incorporating insights from recent discussions on responsible AI governance.

International Efforts

- UNESCO Initiatives: Promotes global standards for AI ethics, focusing on transparency, accountability, and human rights.

- EU AI Act: Aims to regulate high-risk AI applications, ensuring compliance with safety and ethical standards before deployment.

- OECD AI Principles: Establishes principles emphasizing responsible stewardship of AI technologies, including fairness and transparency.

Global Examples of AI Governance

- GDPR (European Union): A landmark regulation that sets strict guidelines for data protection and privacy, impacting how AI systems handle personal data.

- OECD AI Principles: Adopted by over 40 countries, these principles emphasize transparency, fairness, and accountability in AI systems.

- China’s Algorithm Registry: Requires companies to register their algorithms with the government, promoting transparency and accountability in AI applications.

- U.S. Executive Order on AI: Issued in October 2023 as Executive Order 14110 and rescinded in January 2025, this order aimed to create safeguards for the ethical development of AI technologies.

- EU AI Act: In force since August 2024, with obligations phasing in over the following years, this legislation regulates high-risk AI systems to ensure they meet safety and ethical standards.

- Global Partnership on AI (GPAI): Established by 15 countries to promote the ethical adoption of AI through collaboration among governments, industry, and academia.

Corporate Initiatives

- Google’s AI Principles: Guides the ethical development of AI applications by prioritizing safety, privacy, and fairness.

- Microsoft’s Responsible AI Initiatives: Implements governance structures that include ethical guidelines and internal review boards for oversight.

- AI Governance Alliance: Serves as the apex body for decision-making in organizations, aligning AI goals with business objectives and ensuring compliance with regulations.

Impact on India and Initiatives

India’s diverse demographic and socio-economic landscape presents both opportunities and challenges for AI governance. As AI systems become increasingly integrated into critical sectors such as healthcare, finance, and education, there is a pressing need to establish robust governance frameworks that address issues like algorithmic bias, data privacy, and accountability. Without effective governance, AI technologies could exacerbate existing inequalities and pose risks to individual rights.

Several initiatives illustrate India’s commitment to developing a responsible AI ecosystem:

- National Strategy for Artificial Intelligence: Launched by NITI Aayog, this strategy aims to position India as a global leader in AI while ensuring ethical considerations are at the forefront of AI development.

- AI for All: This initiative focuses on democratizing AI by making it accessible to various sectors, including agriculture and healthcare.

- Digital India Act: Proposed legislation aimed at regulating digital technologies, including AI.

- AI in Healthcare: The Indian government has implemented AI-driven solutions in healthcare, such as using machine learning algorithms for early disease detection and diagnosis.

- Smart Cities Mission: This initiative incorporates AI technologies for urban planning and management. Effective governance is essential to prevent misuse of surveillance technologies and ensure citizen privacy.

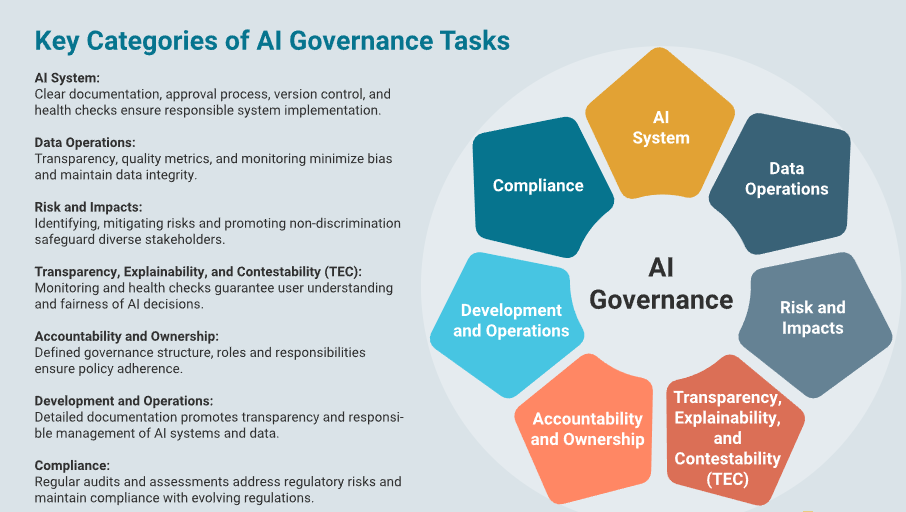

Key Components of AI Governance

AI System Management

- Detailed Documentation: Document each system’s targeted use cases, technical architecture, potential risks, and expected impacts on diverse stakeholders (e.g., students, teachers, parents). Transparency builds trust and allows for scrutiny.

- Clear Approval Process: Create a structured approval process for new AI systems that considers ethical implications, alignment with organizational objectives, and potential risks.

- Version Control and Health Checks: Implement strict version control and conduct regular health checks to ensure performance, security, and adherence to initial goals.

Data Operations

- Transparency in Data Handling: Document all data preprocessing steps, quality assurance procedures, and sourcing methods to foster trust and allow for scrutiny.

- Data Quality Metrics: Define and monitor relevant data quality metrics like completeness, accuracy, and representativeness to minimize biases in outputs.

- Data Quality Monitoring: Establish continuous data quality monitoring processes and regular health checks to identify and address issues promptly.
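
The completeness and representativeness metrics mentioned above can be computed with very little code. The sketch below is one possible formulation, not a standard: the function names, the sample rows, and the reference population shares are all hypothetical.

```python
def completeness(rows, required_fields):
    """Fraction of rows in which every required field is present and non-empty."""
    if not rows:
        return 0.0
    complete = sum(
        all(row.get(f) not in (None, "") for f in required_fields) for row in rows
    )
    return complete / len(rows)

def representation_gap(rows, field, reference_shares):
    """Per-group difference between the dataset share and a reference share.

    Positive values mean the group is over-represented in the dataset,
    negative values mean it is under-represented.
    """
    counts = {}
    for row in rows:
        group = row.get(field)
        if group is not None:
            counts[group] = counts.get(group, 0) + 1
    total = sum(counts.values())
    return {
        group: (counts.get(group, 0) / total if total else 0.0) - share
        for group, share in reference_shares.items()
    }

# Hypothetical dataset and reference population shares
rows = [
    {"id": 1, "region": "urban", "label": "yes"},
    {"id": 2, "region": "urban", "label": "no"},
    {"id": 3, "region": "urban", "label": None},
    {"id": 4, "region": "rural", "label": "yes"},
]
reference = {"urban": 0.5, "rural": 0.5}

print("completeness:", completeness(rows, ["region", "label"]))
print("representation gap:", representation_gap(rows, "region", reference))
```

Tracking these numbers over time, rather than once at collection, is what turns them into the continuous monitoring described in the last bullet.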

Risk Assessment

- Identifying and Mitigating Risks: Conduct thorough risk assessments for each AI system to document potential harms and impacts on different stakeholders (e.g., students, teachers).

- Non-Discrimination Assurance: Implement processes to ensure non-discrimination and fairness in AI systems through bias detection and mitigation strategies.

Transparency, Explainability, and Contestability (TEC)

- TEC Expectation Canvassing: Engage with identified stakeholders to understand their expectations regarding transparency, explainability, and contestability of AI systems.

- TEC Monitoring: Design a monitoring process to assess the transparency, explainability, and contestability of deployed AI systems.

- TEC Health Checks: Conduct regular health checks to gather feedback on the understandability and fairness of AI decisions.

Accountability and Ownership

- Clearly Defined Roles: Assign clear roles and responsibilities for each governance task within the organization to ensure accountability.

- Accountability Mechanisms: Establish mechanisms such as regular reporting or internal audits to ensure adherence to ethical principles.

Development and Operations

- Development Process Documentation: Thoroughly document the development process for each AI system, including design choices and training procedures.

- Operations Monitoring Documentation: Document all operational procedures for AI systems to ensure transparency in management.

- Retirement Process: Establish a clear process for retiring AI systems safely while ensuring responsible data disposal.

Compliance

- Regular Audits: Conduct internal and external audits to assess compliance with ethical guidelines and data privacy regulations.

- Regulatory Risk Assessment: Evaluate potential regulatory risks associated with each AI use case to ensure compliance with evolving regulations.

- Staying Up-to-Date: Keep current with evolving regulations on AI and data privacy, adapting governance practices accordingly.

By adopting these frameworks and addressing associated challenges, organizations can effectively navigate the complexities of AI governance while maximizing the benefits of this transformative technology. Responsible governance not only enhances trust among users but also promotes accountability and fairness in the rapidly evolving landscape of artificial intelligence.

Building Responsible AI Systems: Best Practices

Here are actionable steps to guide organizations in building responsible AI systems, along with real-world examples that illustrate these principles.

Ethical AI Design Principles

- Fairness: Ensure AI systems treat all individuals equitably, avoiding discrimination based on race, gender, age, or other protected attributes. For instance, Pymetrics uses neuroscience-based games to match candidates’ traits with job profiles, with the stated aim of reducing bias in recruitment.

- Accountability: Establish clear lines of responsibility for AI outcomes. State Farm has implemented a governance system for its Dynamic Vehicle Assessment Model (DVAM), allowing for transparent decision-making in claims processing.

- Transparency: Design AI systems that are understandable and interpretable. OpenAI emphasizes transparency in its ChatGPT usage policies by informing users about the AI’s involvement in sensitive areas like healthcare and finance.

Diverse and Inclusive Datasets

- Importance of Inclusive Data Representation: Utilize diverse datasets that accurately represent the populations affected by AI systems. For example, IBM Watson Health analyzes vast medical data while ensuring patient privacy to improve disease diagnosis.

- Bias Mitigation Strategies: Implement techniques such as data augmentation and adversarial training to reduce bias in datasets and models. Meta has launched projects focusing on algorithmic fairness to reduce bias in its models.

Explainable AI

- Interpretable Models: Develop models that provide clear explanations for their predictions. Tools like LIME and SHAP enhance model interpretability across various sectors. For example, Mind Foundry partnered with the Scottish Government to build an explainable AI system that allows users of varying technical experience to understand decision-making processes.

- User-Centric Communication: Ensure explanations are tailored to the audience’s level of understanding. Microsoft’s Responsible AI initiatives include guidelines for communicating model decisions clearly to users.
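
To give a feel for what model explanation involves, here is a minimal sketch of permutation importance, a simpler model-agnostic technique than LIME or SHAP and not a substitute for either. It shuffles one feature at a time and measures how much accuracy drops. The toy model, the data, and the function names are hypothetical.

```python
import random

def accuracy(model, X, y):
    """Share of rows where the model's output matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_features, repeats=20, seed=0):
    """Average accuracy drop when a single feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, shuffled, y))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical model: approves when feature 0 (income) exceeds 50 and
# ignores feature 1 entirely.
def toy_model(row):
    return row[0] > 50

X = [[20, 1], [35, 0], [48, 1], [55, 0], [70, 1], [90, 0]]
y = [toy_model(row) for row in X]

scores = permutation_importance(toy_model, X, y, n_features=2)
for name, score in zip(["income", "other"], scores):
    print(f"{name}: accuracy drop {score:.2f}")
```

A feature the model ignores shows no accuracy drop, while a feature it relies on shows a clear one. For a non-technical audience, the same result could be communicated as a plain-language statement such as "income was the main factor in this decision".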

Human-in-the-Loop (HITL) Systems

- Incorporating Human Oversight: Design AI systems that include human judgment in critical decision-making processes. Customer service platforms like Convin utilize HITL approaches to enhance interactions while ensuring fairness.

- Feedback Mechanisms: Establish channels for human feedback to continuously improve AI models based on real-world performance and user experiences.
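
A common way to implement the two points above is to route low-confidence or high-stakes cases to a person and to log the reviewer's decision for later retraining. The sketch below assumes a hypothetical confidence threshold of 0.9 and hypothetical names throughout; appropriate thresholds depend heavily on the application.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float
    decided_by: str  # "model" or "human_review"

review_queue = []
feedback_log = []

def route(case_id, label, confidence, threshold=0.9, high_stakes=False):
    """Accept the model's output only when it is confident and the case is low stakes."""
    if high_stakes or confidence < threshold:
        return Decision(case_id, label, confidence, decided_by="human_review")
    return Decision(case_id, label, confidence, decided_by="model")

def record_feedback(decision, human_label):
    """Store the reviewer's label so disagreements can inform retraining."""
    feedback_log.append({
        "case_id": decision.case_id,
        "model_label": decision.label,
        "human_label": human_label,
        "agreed": decision.label == human_label,
    })

# Hypothetical model outputs: (case id, label, confidence, high stakes?)
outputs = [
    ("c1", "approve", 0.97, False),
    ("c2", "deny", 0.62, False),
    ("c3", "approve", 0.99, True),
]
for case_id, label, conf, stakes in outputs:
    decision = route(case_id, label, conf, high_stakes=stakes)
    if decision.decided_by == "human_review":
        review_queue.append(decision)

# A reviewer overrides the model on the first queued case.
record_feedback(review_queue[0], human_label="approve")
print([d.case_id for d in review_queue])
print(feedback_log)
```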

Regular Audits and Monitoring

- Continuous Evaluation: Implement regular audits of AI systems to assess compliance with ethical standards. Organizations like Deloitte promote continuous monitoring of AI technologies to ensure they adhere to responsible practices.

- Performance Metrics: Develop key performance indicators (KPIs) reflecting ethical considerations, ensuring models are evaluated on accuracy as well as fairness.
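
Evaluating a model on accuracy as well as fairness can be as simple as reporting both numbers side by side. The sketch below pairs overall accuracy with the gap in false positive rates between groups, which is one of several possible fairness measures and an assumption of this example rather than a recommendation. The labels, predictions, and group names are made up.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def false_positive_rate(y_true, y_pred):
    """Share of true negatives (label 0) that were predicted positive (1)."""
    negatives = [(t, p) for t, p in zip(y_true, y_pred) if t == 0]
    if not negatives:
        return 0.0
    return sum(p == 1 for _, p in negatives) / len(negatives)

def fpr_gap(y_true, y_pred, groups):
    """Largest difference in false positive rate between any two groups."""
    rates = {}
    for g in set(groups):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        rates[g] = false_positive_rate(
            [y_true[i] for i in idx], [y_pred[i] for i in idx]
        )
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical evaluation data: 1 = flagged as high risk
y_true = [0, 0, 1, 1, 0, 0, 1, 1]
y_pred = [0, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, per_group = fpr_gap(y_true, y_pred, groups)
print(f"accuracy: {accuracy(y_true, y_pred):.2f}")
print(f"false positive rate by group: {per_group}")
print(f"false positive rate gap: {gap:.2f}")
```

In this toy data the model is 75% accurate overall, yet every false positive falls on group B. A KPI dashboard that reported accuracy alone would miss that.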

Stakeholder Collaboration

- Engaging Diverse Stakeholders: Involve policymakers, developers, ethicists, and end-users in the development process. Google’s 2019 attempt to convene an external AI ethics advisory council, which was dissolved shortly after it was announced, shows that such collaborative efforts also need careful design to earn stakeholder trust.

- Community Involvement: Foster community engagement through public consultations and feedback sessions to gather insights on societal impacts related to AI deployment.

Future Outlook and Challenges

As we look to the future of AI ethics and governance, several emerging trends and potential challenges are on the horizon. The rapid evolution of technologies such as generative AI and autonomous agents presents both opportunities and ethical dilemmas that require careful consideration.

Emerging Trends in AI

- Generative AI: This technology is transforming industries by enabling the creation of content, designs, and even synthetic media. However, its rise has also led to concerns about misinformation and the potential for misuse, such as deepfakes that can manipulate public perception.

- Autonomous Agents: As AI systems become more autonomous, they will increasingly make decisions without human intervention. This raises questions about accountability and the ethical implications of allowing machines to operate independently in critical areas like healthcare and transportation.

Potential Ethical Challenges

- Deepfakes: The ability to create hyper-realistic videos and audio using generative AI poses significant risks, including misinformation campaigns and erosion of trust in media. Addressing these challenges will require robust detection mechanisms and regulatory frameworks.

- Synthetic Biology: The intersection of AI with synthetic biology raises ethical questions about genetic manipulation and bioengineering. Ensuring responsible innovation in this field will necessitate comprehensive governance that considers societal impacts.

Conclusion

To effectively manage these evolving technologies, there is an urgent need for adaptive governance models that can keep pace with technological advancements. Current regulatory frameworks often lag behind innovation, creating gaps that can be exploited. Organizations must therefore proactively establish governance mechanisms that are flexible enough to accommodate rapid change while ensuring ethical compliance.

The future of AI ethics and governance will depend on our ability to anticipate challenges and implement adaptive strategies that promote responsible innovation. By fostering collaboration among stakeholders—policymakers, technologists, ethicists, and the public—we can navigate the complexities of AI development while safeguarding societal values.

FAQs

What is AI ethics and governance?

AI ethics and governance refer to the principles, policies, and regulations that guide the development and use of artificial intelligence technologies. They focus on ensuring fairness, accountability, transparency, and respect for human rights in AI systems to prevent misuse and protect individuals.

What is ethical AI and responsible AI development?

Ethical AI involves designing and deploying AI systems that prioritize fairness, transparency, and accountability. Responsible AI development means creating these systems with a commitment to ethical standards, ensuring they benefit society while minimizing risks such as bias and privacy violations.

How is AI changing the landscape?

AI is transforming various industries by automating processes, enhancing decision-making, and improving efficiency. It is reshaping sectors like healthcare, finance, transportation, and education by enabling predictive analytics, personalized services, and smarter resource management.

What is responsible AI and AI governance?

Responsible AI refers to the practice of developing AI systems that are ethical, fair, and accountable. AI governance encompasses the frameworks and regulations that ensure these systems operate within established ethical guidelines and legal standards to protect users’ rights and promote public trust.

How is AI used in governance?

AI is used in governance to enhance decision-making processes, improve public services, and increase efficiency. Applications include data analysis for policy development, predictive analytics for resource allocation, and automated systems for managing public safety or health services.